Permission-Based Grounding in Microsoft Copilot

July 10, 2025

Microsoft Copilot is the AI assistant in Microsoft 365 and the Office apps. It helps generate documents, draft e-mails, handle advanced tasks and retrieve knowledge. For knowledge retrieval, the commercial offering of M365 Copilot can find information from SharePoint, Teams and recorded meetings.

It also allows you to connect third-party sources through Microsoft Graph Connectors. Microsoft itself offers many out-of-the-box connectors. RheinInsights augments this offering with highly scalable, feature-complete enterprise search connectors. In particular, all of our connectors bring secure search and permission-based grounding to M365 Copilot.

Please note that the free version of Copilot is not able to retrieve organizational knowledge as of the time of writing.

What Does Permission-Based Grounding in Copilot Mean?

Organizational knowledge lives in enterprise repositories, such as Teams, SharePoint, Atlassian Confluence, Zendesk, ServiceNow or SAP. All of these sources come with fine-grained access controls on their content. Users must log in and are only allowed to see a portion of the content. In general, employees and team members are not allowed to access all of the content in an IT system.

How Do Copilot and Other AI Tools Generate Answers?

Copilot is a product that relies heavily on an underlying large language model (LLM), such as GPT-4o. There are two ways for the software to generate answers:

Either through the LLM itself, or

Through retrieval augmented generation, with a search engine.

In more detail: if the answer is common knowledge and can be found hundreds of times in the training corpus of the LLM, then the LLM itself predicts the right answer.

Otherwise, the implementation forwards the question to a search engine, the search engine produces results, and the LLM summarizes these results into an answer. This is called retrieval augmented generation (RAG) or grounding.
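The two paths can be illustrated with a minimal sketch. It is not Copilot's implementation: the corpus, the toy keyword search and the `llm` callable are all hypothetical stand-ins, chosen only to show where the search step sits relative to the LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    title: str
    text: str


# Hypothetical in-memory corpus standing in for a real search index.
CORPUS = [
    Document("Travel policy", "Employees book travel through the internal travel portal."),
    Document("Onboarding", "New hires receive their laptop on the first day."),
]


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def search(question: str, corpus: list[Document], top_k: int = 3) -> list[Document]:
    """Toy keyword search: rank documents by the number of words shared with the question."""
    terms = tokenize(question)
    scored = [(len(terms & tokenize(doc.text)), doc) for doc in corpus]
    hits = [(score, doc) for score, doc in scored if score > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in hits[:top_k]]


def build_grounded_prompt(question: str, hits: list[Document]) -> str:
    """Put the search results in front of the question so the LLM can summarize them."""
    context = "\n".join(f"[{doc.title}] {doc.text}" for doc in hits)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


def answer(question: str, corpus: list[Document], llm: Callable[[str], str]) -> str:
    """Use grounding when the search finds something, otherwise ask the LLM directly."""
    hits = search(question, corpus)
    if not hits:
        return llm(question)  # path 1: the LLM answers from its training data
    return llm(build_grounded_prompt(question, hits))  # path 2: RAG / grounding
```

Here `llm` is any function that takes a prompt and returns text, so the sketch can be tried with a stub before wiring in a real model.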

For more details on the grounding or RAG approach, see for instance our blog post https://www.rheininsights.com/blog/en/Retrieval+Augmented+Generation+with+Azure+AI+Search+and+Atlassian+Confluence.php.

Why is a Lack of Permissions a Problem for Grounding?

For Copilot this means that, during the grounding step, it uses Microsoft Search to generate an answer from internal knowledge. For Microsoft Teams, Exchange (Outlook) and SharePoint, Microsoft Search comes with a full understanding of the permissions. Other internal knowledge comes from IT systems which you index separately into Microsoft Search.

But, as pointed out, not all users are allowed to access all knowledge. If you simply index all knowledge without taking care of permissions, Copilot or other bots will also use read-protected documents to generate an answer to a question. This means that the answer can include knowledge which the user does not have access to. In the worst case, you end up with a severe data leak.

Common examples are questions such as “Tell me about recent or planned layoffs” or “Do you have information on our salaries?”

How to Implement Permissions in Copilot?

Since the beginning, Graph Connectors and Microsoft Search have supported indexing fine-grained permissions with each and every indexed document. The good news is that these permissions, so-called access control lists (ACLs), are always taken into account when searching, both in Microsoft Search itself and in Microsoft Copilot.

Thus, all RheinInsights Connectors use these APIs to map the permission model of the content sources to Microsoft Search and Copilot. In turn, users can only find knowledge that they are allowed to access in the underlying knowledge and content sources.

The same approach holds true for your own custom connectors. So how does this technically work?

Technical Approach

Indexing API

The relevant API for indexing is

PUT /external/connections/{connection-id}/items/{item-id}

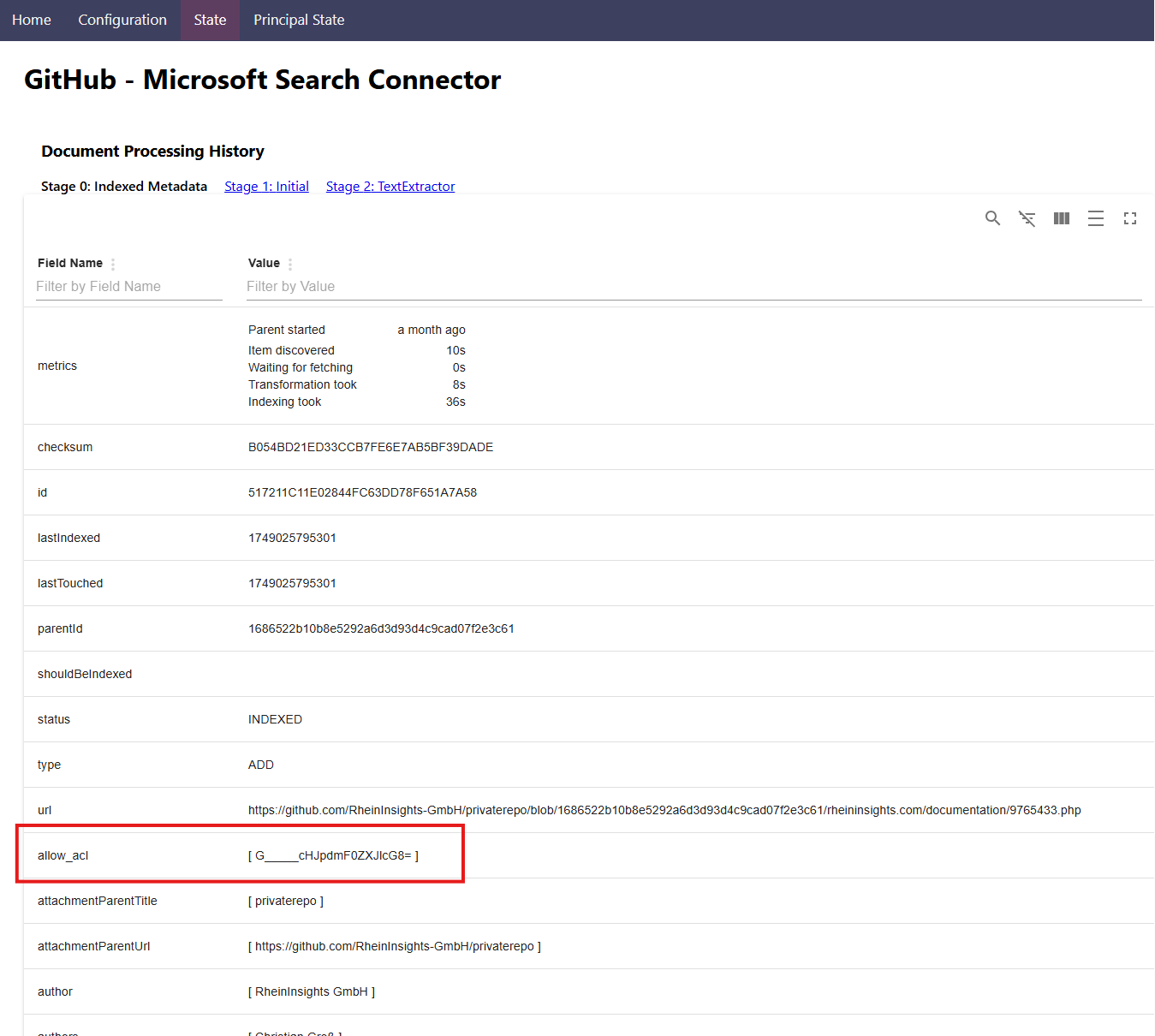

The external item contains a separate field called “acl”, the access control list. This is more or less a list of entries that tell Microsoft Search who has access to a document. In particular, you can add entries of type externalGroup, i.e., one or more custom groups. The group names or IDs can be freely chosen in the value field, and such groups do not necessarily live in Entra ID.

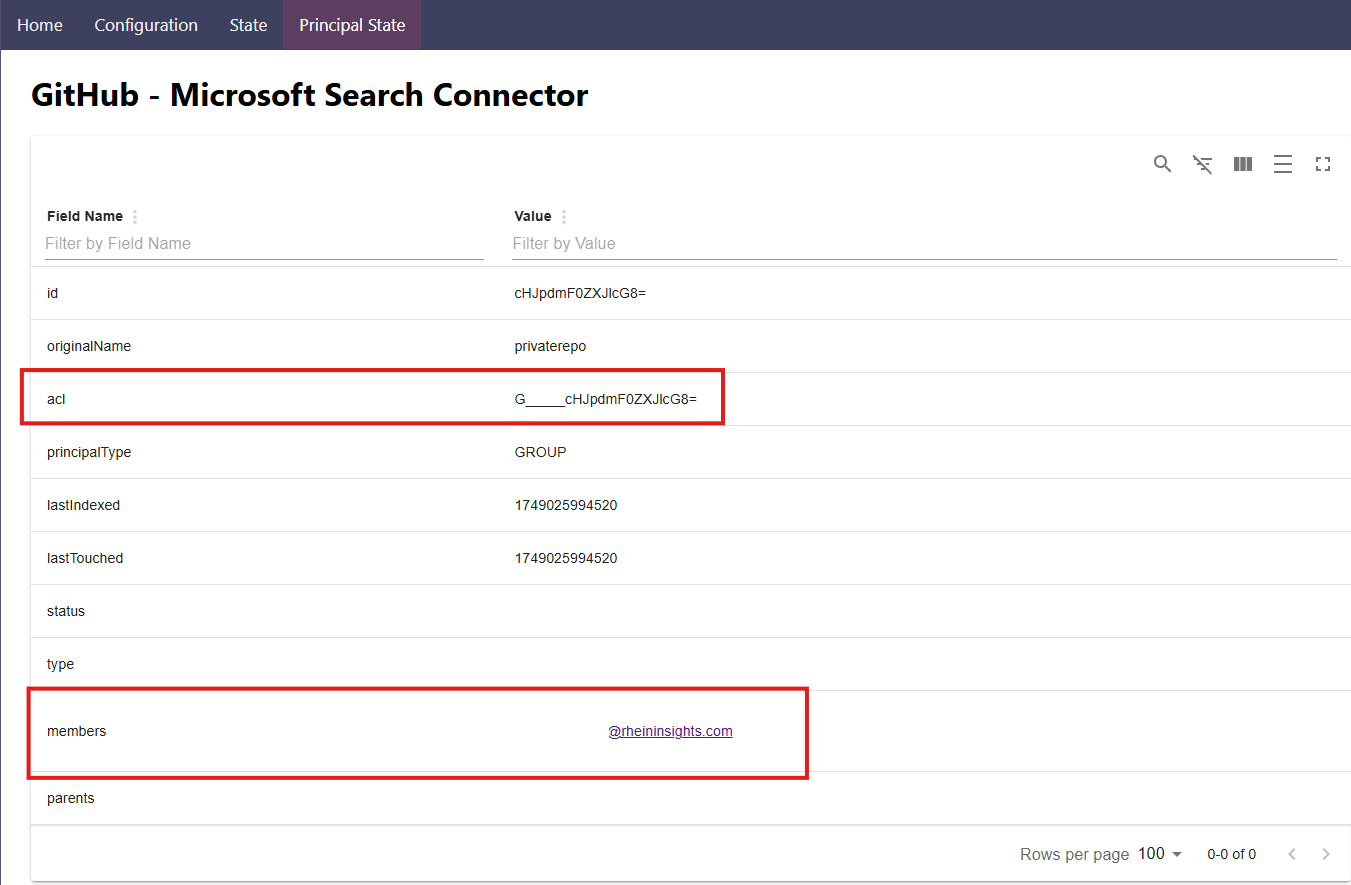
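As a hedged illustration of such an item, the sketch below only builds the request (method, URL and JSON body) without sending it. The field names follow the Microsoft Graph externalItem resource as we understand it; the function name, the sample IDs and the `title` property are assumptions for illustration, and `title` would have to exist in your connection's schema.

```python
import json
from urllib.parse import quote

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_item_request(connection_id, item_id, title, text,
                       allowed_user_ids, allowed_external_groups):
    """Return (method, url, body) for indexing one item together with its ACL.

    Hypothetical helper: it prepares the request only, sending it with an
    authenticated HTTP client is left to the caller.
    """
    url = (f"{GRAPH_BASE}/external/connections/{quote(connection_id, safe='')}"
           f"/items/{quote(item_id, safe='')}")

    # One ACL entry per principal that is allowed to read the document.
    acl = [{"type": "user", "value": user_id, "accessType": "grant"}
           for user_id in allowed_user_ids]
    acl += [{"type": "externalGroup", "value": group_id, "accessType": "grant"}
            for group_id in allowed_external_groups]

    body = {
        "acl": acl,
        # Assumption: the connection schema defines a "title" property.
        "properties": {"title": title},
        "content": {"value": text, "type": "text"},
    }
    return "PUT", url, json.dumps(body)
```

A connector would call this once per document, deriving the two principal lists from the permissions it reads in the content source.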

Synchronizing User-Group Relationships

If you decide to index external groups, Microsoft Search (and Copilot) does not know who their members are. So you also need to provide the user-group relationships, that is, relate the members of the external groups to Entra ID users. This works by first formally creating the group

POST https://graph.microsoft.com/v1.0/external/connections/{connection-id}/groups

and then by sending the members

POST https://graph.microsoft.com/v1.0/external/connections/{connection-id}/groups/{externalGroupId}/members

Here you usually add Entra ID users, with the type user and the Entra user ID as the identifier of the member.
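The two calls can be sketched as follows. The snippet only prepares the requests and does not send them. The body fields (`id`, `displayName`, `type`, `identitySource`) reflect our reading of the Microsoft Graph externalGroup and identity resources and should be checked against the current API reference; the function name and sample IDs are hypothetical, and the IDs are assumed to be URL-safe.

```python
import json

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_group_requests(connection_id, group_id, display_name, member_user_ids):
    """Return the (method, url, body) tuples needed to sync one external group.

    The first request creates the group, each following request adds one
    Entra ID user as a member.
    """
    groups_url = f"{GRAPH_BASE}/external/connections/{connection_id}/groups"
    requests = [
        ("POST", groups_url, json.dumps({"id": group_id, "displayName": display_name})),
    ]

    members_url = f"{groups_url}/{group_id}/members"
    for user_id in member_user_ids:
        member = {
            "id": user_id,  # object ID of the user in Entra ID
            "type": "user",
            "identitySource": "azureActiveDirectory",
        }
        requests.append(("POST", members_url, json.dumps(member)))
    return requests
```

The group ID used here must match the value written into the acl entries of type externalGroup, otherwise the membership will never apply to any document.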

It is worth noting that, through the /items-API, you can add group names to acl fields even if these groups have not yet been created through the /groups-API.

Please note that it usually takes several hours for Microsoft Search to propagate the most recent changes in user-group relationships from this API to where query processing takes place.

The Result

In turn, at query time Microsoft Search will generate a filter which acts on the ACL field along with the original query:

query = (rheininsights) AND (acl:"user@company.org" OR acl:"group1" ...)
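The effect of this filter can be modeled in a few lines. This is a simplified simulation, not how Microsoft Search is implemented: the in-memory index, the group table and all names are hypothetical, and the text match is a plain substring check.

```python
from dataclasses import dataclass, field


@dataclass
class IndexedItem:
    title: str
    text: str
    acl: set[str] = field(default_factory=set)  # users and groups allowed to read


def principals_for(user: str, group_members: dict[str, set[str]]) -> set[str]:
    """The user plus every group the user belongs to."""
    return {user} | {group for group, members in group_members.items() if user in members}


def secure_search(query: str, user: str, index: list[IndexedItem],
                  group_members: dict[str, set[str]]) -> list[IndexedItem]:
    """Return items that match the query AND share at least one principal with the user."""
    principals = principals_for(user, group_members)
    needle = query.lower()
    return [item for item in index
            if needle in item.text.lower() and item.acl & principals]


# Hypothetical sample data.
INDEX = [
    IndexedItem("Connector overview", "RheinInsights connectors for Copilot", {"group1"}),
    IndexedItem("HR plan", "RheinInsights salary review", {"hr-team"}),
]
GROUPS = {"group1": {"user@company.org"}, "hr-team": {"hr@company.org"}}

visible = secure_search("rheininsights", "user@company.org", INDEX, GROUPS)
print([item.title for item in visible])
```

Both sample items match the query text, but the second one is only returned for members of the hypothetical hr-team group, which is exactly the behavior that keeps protected content out of a grounded answer.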

So users will only find knowledge they also have access to in the content source. For more information on the general secure search approach, see also our blog post https://www.rheininsights.com/blog/en/Permission-Based+Retrieval+Augmented+Generation+RAG.php.

What Impact Does This Have For Copilot?

For many sources, vendors simply let administrators decide whether the entire indexed source is exposed to the whole company or to selected user groups. But this approach does not scale at all. In general, organizations want to index data once, because configuring a connector and indexing take time and keep IT admins from more important tasks. So why index one and the same source multiple times?

In addition, the approach of Copilot and Copilot Studio encourages users to freely create their own agents and share them. But this tends to become highly complex and inefficient if you do not have fine-grained and exact safeguards that prevent Copilot from exposing protected content.

The solution to both problems is to use secure search connectors, i.e., connectors which make sure that the content source’s permission model is reflected in Copilot. In turn, this prevents the oversharing scenario and makes it easy to share all agents across the whole organization.